Efficient Docker build and cache re-use with SSH Docker daemon

I've been working with Docker for 8+ years and have seen many teams struggle with build optimization, mostly around CI config for efficient cache re-use. I was surprised how little literature mentions that a Docker daemon can be configured as a remotely available server, which makes for a de facto secure remote cache and build engine.

I've been using this pattern for some time in various teams and projects with great results. Coupled with simple Dockerfile optimizations (and BuildKit cache mounts when needed), Docker builds are mostly smooth and painless. It also avoids overly complex CI config for cache re-use, as well as vendor-specific cache features.

Reminder: Docker architecture and basic build optimization

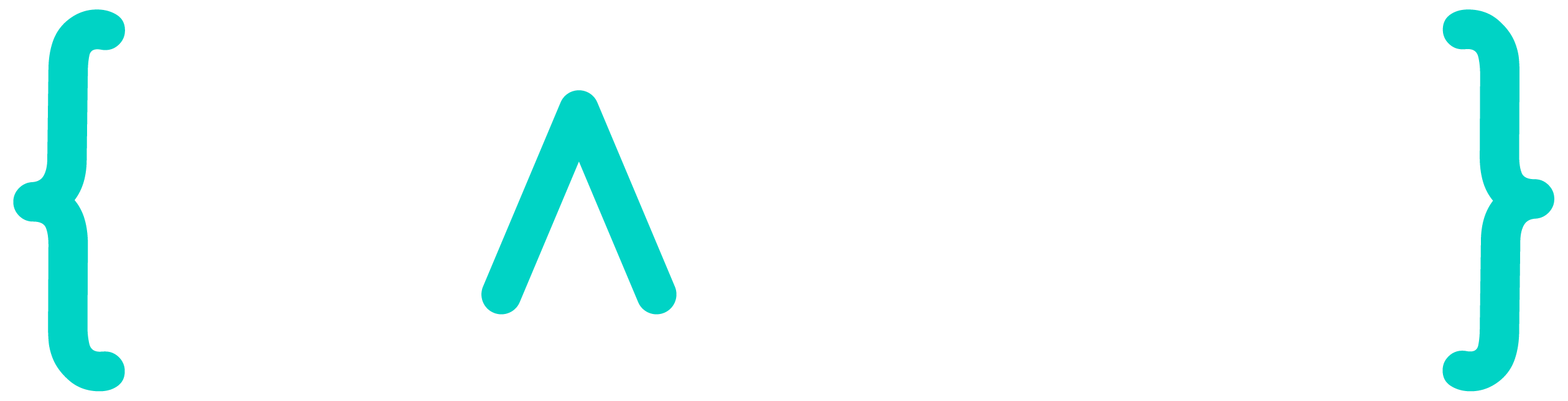

If you're reading this, you probably went through some optimization of your Docker image build process. You know Docker architecture basics and understand that the Docker daemon is a server which can run locally or remotely. The `docker` CLI is just a client talking to the daemon through its REST API.

When you build a Docker image with a command such as:

```shell
docker build . -t myapp:latest
```

you're sending the build context (here, the current directory) to the Docker daemon. The daemon starts a build container in which it executes your Dockerfile instructions, and creates an image from the result.

You may already have applied:

- Dockerfile best practices
- Docker build cache optimizations
  - Especially `--mount=type=cache,target=/...`, very powerful when installing packages with `apt`, `(p)npm`, `pip` or such

If not, it's better to start from there before going further 😉
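As a refresher, here is what a BuildKit cache mount looks like. This is a minimal sketch that assumes a hypothetical Python app with a `requirements.txt` and a `main.py`. The script only writes the Dockerfile, which you would then build with `docker build . -t myapp:latest` as usual.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Write an example Dockerfile for a hypothetical Python app
# (requirements.txt and main.py are assumed to exist in the build context)
cat > Dockerfile <<'EOF'
# syntax=docker/dockerfile:1
FROM python:3.11-slim
WORKDIR /app

# Copy only the dependency manifest first: this layer (and the install below)
# is invalidated only when requirements.txt changes
COPY requirements.txt .

# Keep pip's download cache in a BuildKit cache mount: the cache lives on the
# Docker daemon and survives even when this layer has to be rebuilt
RUN --mount=type=cache,target=/root/.cache/pip \
    pip install -r requirements.txt

# Copy application code last, as it changes most often
COPY . .
CMD ["python", "main.py"]
EOF
```

Locally this already speeds up rebuilds. In CI, it only pays off if the cache mount survives between jobs, which is exactly what ephemeral daemons prevent.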

Docker build and cache optimization: the Good, the Bad and the Ugly

Running Docker builds in CI environments is not so easy. Even after carefully crafting your Dockerfile and CI config, your build job may still take several minutes with full cache re-use.

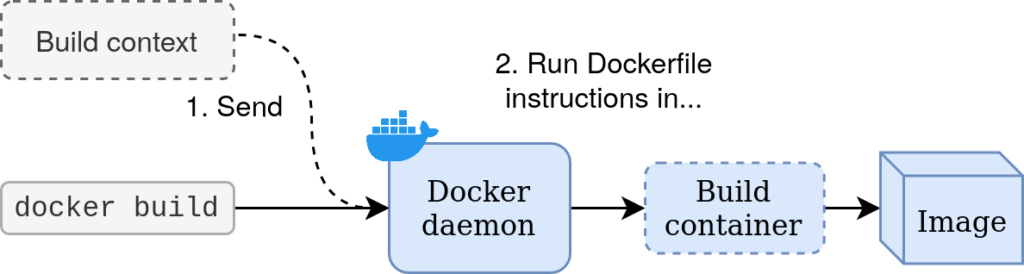

Why? Probably because starting a DinD (Docker-in-Docker) container, pulling/pushing cache, etc. takes precious time, whereas your build takes 3 seconds locally. And if the layer building your app or downloading dependencies gets invalidated, you're in for the long haul...

A few common solutions exist to ease the pain:

- Use `docker pull` and `docker build --cache-from=...` to re-use cache from specified images, as explained in this article and countless others.
- Play with your CI system's cache features, most often relying on automated push/pull of Docker cache. It's often limited:
  - You can't leverage BuildKit `RUN --mount=type=cache` to keep cache volumes around
  - You'll lose precious time pushing/pulling additional data
  - There's probably a maximum allowed cache size
- Use a static Docker daemon or daemonless build tools (such as Kaniko, img or Buildah)
  - Most often with Kubernetes and smart volume management, or by mounting the Docker socket from the underlying machine (not very secure...)
  - Efficiency-wise, this method is probably among the best solutions I've seen after almost 10 years building Docker / OCI images, but it requires complex setup of both infra and CI job config. You have to either deploy your CI runner/agent around this tooling or craft complex build instructions that most developers can't (or won't) write themselves, relying on a Docker or DevOps expert.
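For comparison, here is roughly what the first pattern looks like in a CI job script. This is a sketch that assumes a BuildKit-enabled daemon and an image name passed as argument. Note the extra pull, and the `BUILDKIT_INLINE_CACHE` build argument needed so the pushed image can later serve as a `--cache-from` source.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Classic "pull, then --cache-from" pattern for ephemeral daemons (e.g. DinD):
# each job re-downloads the previous image to use its layers as cache.
build_with_registry_cache() {
  local image="$1"
  # First build ever: there is nothing to pull yet, so don't fail the job
  docker pull "$image" || true
  # BUILDKIT_INLINE_CACHE=1 embeds cache metadata in the image, so that the
  # pushed image can serve as --cache-from source for the next job
  docker build \
    --cache-from "$image" \
    --build-arg BUILDKIT_INLINE_CACHE=1 \
    -t "$image" .
  # Push so the next job can pull it again
  docker push "$image"
}
```

It would be called as `build_with_registry_cache registry.example.com/myapp:latest`, where the image name is hypothetical. Every job pays for the pull and the push. The contents of `RUN --mount=type=cache` volumes are not carried over at all.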

These patterns are often limited:

- Security trade-off (especially exposing underlying host Docker daemon, allowing easy root escalation)

- Config complexity

- DinD service spin-up time

- Cache and layer push/pull time

Docker build setup: what do we want?

For an efficient Docker build setup, we'll want:

- Efficiency and scalability
  - Whatever CI runner/agent we're running our build from, we want to leverage cache without complex setup on the client side
  - Don't lose time with push/pull of cached volumes/data, image layers, etc.
  - Don't wait for some ephemeral Docker daemon (DinD) to start at the beginning of every job
- Developer experience
  - Developers have a hard time configuring their CI system, often requiring tons of complex setup. Let's have them use a simple `docker build` instead.
  - Ideally, a CI job would consist of `docker build -t myapp:latest . && docker push myapp:latest`, end of story.
- Security
  - Restrict who can access our Docker builder, and secure it properly
  - Prevent root escalation on CI runners/agents (I'm looking at you, `/var/run/docker.sock` mounted from host)

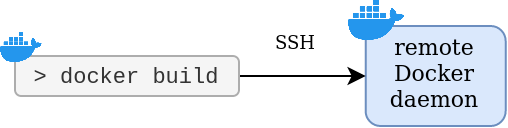

Remote Docker daemon reachable via SSH

Let's deploy a Docker daemon reachable securely via SSH. Our daemon and all its data will remain persistently available, allowing efficient build and cache re-use.

Why does an SSH Docker daemon help with build optimization?

Our SSH Docker daemon has several advantages:

- Cached layers are re-used automatically
  - As cached layers remain stored on the Docker server, you won't have to configure cache download / pull at the beginning of your job.
  - No need to configure a bunch of complex `--cache-from` and other flags on `docker build`
- Always up and running
  - You can use it instantaneously, as if it were running locally.
  - You don't have to wait for an ephemeral DinD to start every job.
- BuildKit volumes are kept between builds
  - As your builds run on the same Docker daemon, you can use `--mount=type=cache` to leverage BuildKit cache mounts without additional config
- Easy to secure
  - Any developer knows (or can learn) how to use SSH
  - Thanks to a rootless Docker daemon, you can prevent root escalation on the host
- Ease of use for developers
  - Simply use `docker build`: the cache from previous builds will be used automatically, as it never left our static daemon

In short, you can run Docker builds from anywhere and re-use the cache of all previous builds, since every build hits the same daemon. All you need is a private SSH key to access the SSH Docker daemon.

Deploy SSH Docker daemon

Let's deploy our SSH Docker daemon on host `dockerd.crafteo.io`.

➡️ See full example on GitHub (and showcase GitLab project and job)

Requirements:

- Our Docker server, reachable via SSH
- An additional open port 2222 dedicated to our Docker daemon (it will be reachable via `ssh://docker_host:2222`)
- `docker` CLI available on the Docker server
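The `PUBLIC_KEY` used in the Compose file comes from a key pair you control. Below is a minimal sketch to generate a dedicated ed25519 key. The default path is a hypothetical choice. An empty passphrase is convenient for CI runners but weaker for interactive use.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Generate (once) a dedicated ed25519 key pair for the SSH Docker daemon
# and print the public key to paste into PUBLIC_KEY.
# The default path is a hypothetical choice; pass another one as first argument.
generate_dockerd_key() {
  local key_path="${1:-$HOME/.ssh/dockerd_ed25519}"
  if [ ! -f "$key_path" ]; then
    mkdir -p "$(dirname "$key_path")"
    # -N "" means no passphrase: handy for CI runners, prefer a passphrase
    # (or ssh-agent) on developer machines
    ssh-keygen -q -t ed25519 -N "" -C "dockerd-ssh" -f "$key_path"
  fi
  # Existing keys are never overwritten, so the printed key stays stable
  cat "${key_path}.pub"
}
```

Running `generate_dockerd_key` prints a line starting with `ssh-ed25519`. The private half (`~/.ssh/dockerd_ed25519` with the default path) is what clients and CI runners will use.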

Rather than setting up complex OS packages, let's use Docker Compose to deploy an SSH server and our Docker daemon:

- Create a `docker-compose.yml` such as:

```yaml
version: "3.9"

services:

  # SSH server
  # See https://hub.docker.com/r/linuxserver/openssh-server
  sshd:
    image: linuxserver/openssh-server:amd64-version-9.0_p1-r2
    environment:
      # Run as user with these UID/GID
      # Allowing connection via user@host
      PUID: 1000
      PGID: 1000
      USER_NAME: user
      # Allow access from this SSH key
      # Also possible to use PUBLIC_KEY_FILE or PUBLIC_KEY_DIR
      PUBLIC_KEY: "ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIDoqcE6AKVXlirhmMuEWYbWZC3jA7jYzkhRuqDfPzCqS"
    ports:
      - 2222:2222
    networks:
      - dockerd-ssh-network
    volumes:
      - docker-certs-client:/certs/client:ro
      - $PWD/openssh-init.sh:/custom-cont-init.d/openssh-init.sh:ro

  # Rootless Docker daemon
  # Reachable from sshd container via tcp://docker:2376
  # See https://hub.docker.com/_/docker
  #
  # Note: 'docker' service name is important as entrypoint
  # will generate TLS certificates valid for 'docker' hostname so it must match
  # the external hostname. Alternatively, use hostname: docker
  docker:
    image: docker:23.0.0-dind-rootless
    privileged: true
    networks:
      - dockerd-ssh-network
    volumes:
      # The rootless daemon keeps its data in the 'rootless' user's home,
      # not in /var/lib/docker
      - docker-data:/home/rootless/.local/share/docker
      - docker-certs:/certs
      - docker-certs-client:/certs/client

volumes:
  # Keep Docker data in volume
  docker-data:
  # Keep auto-generated Docker TLS certificates in volumes
  docker-certs:
  docker-certs-client:

# Let's have our setup in its own network
# (better security if other containers are running on same machine)
networks:
  dockerd-ssh-network:
    name: dockerd-ssh-network
```

- ⚠️ Make sure to replace `PUBLIC_KEY` with your public SSH key

- Create file `openssh-init.sh` initializing our `openssh` server:

```bash
#!/bin/bash
# set -e

# This script will run on openssh-server container startup to install Docker client
# and setup env variables allowing to reach DinD container
# This ensures SSH session on openssh-server container will reach our DinD container seamlessly

apk update && apk add docker-cli

echo "SetEnv" \
  "DOCKER_HOST=tcp://docker:2376" \
  "DOCKER_CERT_PATH=/certs/client" \
  "DOCKER_TLS_VERIFY=1" \
  >> /etc/ssh/sshd_config
```

- Run our stack:

```shell
docker-compose up -d
```

Our SSH Docker daemon is ready!
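A quick check can confirm that the whole chain works before any client is wired up. The chain is SSH into the `sshd` container on port 2222, then its `docker` CLI reaching the rootless DinD daemon through the variables set by `openssh-init.sh`. This is a sketch that assumes your private key is already picked up by `ssh` (default location, `~/.ssh/config` or agent). The default host, user and port are this article's example values.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Sanity-check the SSH Docker daemon. Host, user and port default to this
# article's example values; override them as arguments.
check_dockerd_ssh() {
  local host="${1:-dockerd.crafteo.io}" user="${2:-user}" port="${3:-2222}"
  local opts
  # The remote command is a single string: ssh joins its arguments and the
  # remote shell re-parses them, so the Go template must be quoted inside it
  opts="$(ssh -p "$port" "$user@$host" "docker info --format '{{json .SecurityOptions}}'")"
  # A rootless daemon reports "name=rootless" among its security options
  if [[ "$opts" == *name=rootless* ]]; then
    echo "OK: rootless daemon reachable via ssh://$user@$host:$port"
  else
    echo "FAIL: unexpected security options: $opts" >&2
    return 1
  fi
}
```

If `openssh-init.sh` did not run, the remote `docker info` fails to reach any daemon and the function stops there. Once the check passes, `DOCKER_HOST=ssh://user@dockerd.crafteo.io:2222 docker info` from a client should report the same daemon.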

Use SSH Docker daemon

To use our SSH Docker daemon on the client side, we simply need SSH access configured and to point Docker at the host we want to use, for example:

```shell
export DOCKER_HOST=ssh://user@dockerd.crafteo.io:2222
docker build . # Will use our SSH Docker host
```

The `DOCKER_HOST` env var is not the only way. We can:

- Specify our SSH Docker daemon as Docker host by either:
  - Setting the environment variable: `export DOCKER_HOST=ssh://user@dockerd.crafteo.io:2222`
  - Creating a Docker context:

    ```shell
    docker context create --docker "host=ssh://user@dockerd.crafteo.io:2222" dockerd-ssh
    docker context use dockerd-ssh
    ```

- Configure SSH to use our private key, by either:
  - Letting SSH use a default key location such as `~/.ssh/id_rsa` or `~/.ssh/id_ed25519` (no specific setup)
  - Setting a custom `~/.ssh/config` such as:

    ```
    Host dockerd.crafteo.io
      HostName dockerd.crafteo.io
      User user
      Port 2222
      IdentityFile /path/to/private_key
      StrictHostKeyChecking no
    ```

  - Setting up an SSH agent

What happened?

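Upon calling `docker build` from our local machine, our Docker client opened an SSH connection to the Docker server and ran `docker system dial-stdio` there. That remote `docker` CLI picks up the `DOCKER_HOST`, `DOCKER_CERT_PATH` and `DOCKER_TLS_VERIFY` variables set by `openssh-init.sh`, and relays the Docker API traffic (build context included) to our DinD daemon. It's similar to running:

```shell
ssh -p 2222 user@dockerd.crafteo.io docker build ...
```

except that everything, including sending the build context from our local directory, is driven by our local Docker client.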

Full example: GitLab CI setup

Let's configure a GitLab CI Runner for our SSH Docker daemon. See Install GitLab Runner for basic setup.

➡️ See full example on GitHub (and showcase GitLab project and job)

Remember: we want easy usage for developers with our Docker daemon. The best way to do that is to provide a runner/agent environment configured to use our SSH Docker daemon by default, so that a developer configuring CI jobs just has to run `docker build`.

Set `config.toml` such as:

```toml
[[runners]]
  name = "SSH Docker Daemon Runner"
  url = "https://gitlab.com/"
  executor = "docker"
  token = "xxx"
  # Set DOCKER_HOST for all jobs running for this runner
  # So that docker CLI will always use that host by default
  environment = [
    "DOCKER_HOST=ssh://user@dockerd.crafteo.io:2222"
  ]
  [runners.docker]
    # Mount global SSH config and private key in job's container
    # This will allow script to use ssh commands with our SSH Docker daemon without additional config
    # /etc/ssh/ssh_config is the default system-wide ssh config, so whatever the user in container it should pick it up
    volumes = [
      "/path/to/ssh_config:/etc/ssh/ssh_config",
      "/path/to/dockerd_id_rsa:/dockerd_id_rsa"
    ]
```

Make sure these files are on the runner host, as they are used by our runner:

- `/path/to/dockerd_id_rsa`: SSH private key to access the SSH Docker daemon
- `/path/to/ssh_config`: SSH config to use our SSH key automatically. It is read inside the job container, so `IdentityFile` must point to the key's mount path, such as:

```
Host dockerd.crafteo.io
  HostName dockerd.crafteo.io
  User user
  Port 2222
  IdentityFile /dockerd_id_rsa
  StrictHostKeyChecking no
```

Register our runner with the `dockerd-ssh` tag and we're done!
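If you register the runner from the command line, something along these lines should produce an equivalent runner entry. This is a sketch that assumes the legacy registration-token workflow, with `REGISTRATION_TOKEN` exported as a placeholder. With newer runner authentication tokens, tags are set in the GitLab UI rather than through `--tag-list`. The `/path/to/...` values are the same placeholders as in `config.toml` above.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Register a Docker-executor runner targeting the SSH Docker daemon.
# REGISTRATION_TOKEN is a placeholder (legacy registration workflow);
# /path/to/... are the placeholder paths from config.toml.
register_dockerd_runner() {
  gitlab-runner register \
    --non-interactive \
    --url "https://gitlab.com/" \
    --registration-token "$REGISTRATION_TOKEN" \
    --description "SSH Docker Daemon Runner" \
    --executor docker \
    --docker-image docker \
    --tag-list dockerd-ssh \
    --env "DOCKER_HOST=ssh://user@dockerd.crafteo.io:2222" \
    --docker-volumes "/path/to/ssh_config:/etc/ssh/ssh_config" \
    --docker-volumes "/path/to/dockerd_id_rsa:/dockerd_id_rsa"
}
```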

How does it work?

- GitLab will create a Docker container, mount our SSH key & config and set the `DOCKER_HOST` variable before running the job's script.
- Any `docker` command in our CI job will use `DOCKER_HOST` and our SSH config.

Now our developers can simply set up their `.gitlab-ci.yml` such as:

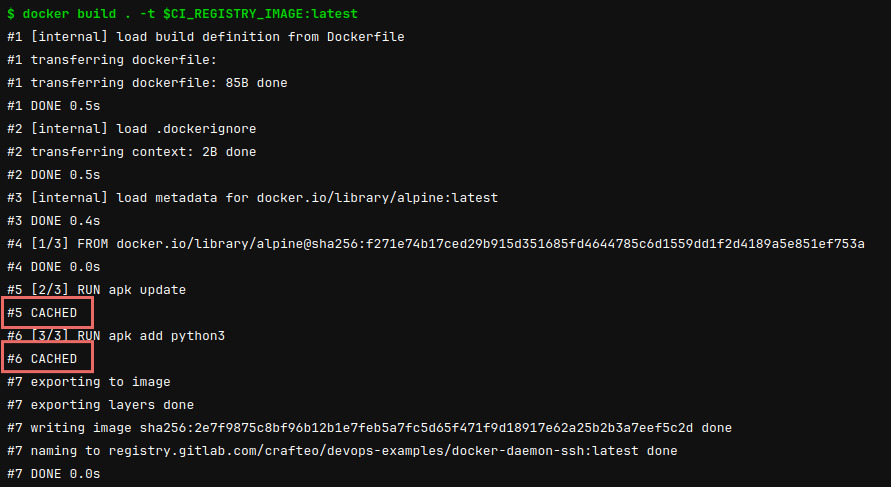

```yaml
docker-build:
  stage: build
  tags: [ dockerd-ssh ]
  image: docker
  script:
    - echo "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"
    - docker build . -t "$CI_REGISTRY_IMAGE:latest"
    - docker push "$CI_REGISTRY_IMAGE:latest"
```

We can see that the resulting job re-uses the cache without additional config.

There's no additional config required to re-use cached layers or BuildKit cache mounts: the same Docker daemon is used for every build and its data is kept across builds. Docker will automatically hit the cache where possible.
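To check from a job log that the cache was actually hit, use BuildKit's plain progress output, which marks re-used steps with a `#N CACHED` line. Below is a small helper, assuming BuildKit is the builder (the default since Docker Engine 23.0).

```shell
#!/usr/bin/env bash
set -euo pipefail

# Count the build steps BuildKit reported as CACHED.
# Reads `docker build --progress=plain` output on stdin.
count_cached_steps() {
  # Matches lines such as "#5 CACHED". grep -c still prints 0 when nothing
  # matches but exits 1, hence the `|| true` to keep the job going
  grep -cE '^#[0-9]+ CACHED$' || true
}
```

For example, run `docker build --progress=plain -t myapp:latest . 2>&1 | tee build.log`, then `count_cached_steps < build.log`. On a second run with unchanged sources, the count should cover most steps.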


Wait, didn't you say "easy" to secure and use? That seems overly complex!

This setup is indeed a bit complex. In fact, we just moved complexity away from developers: instead of having to play with complex build arguments and CI config, everything "hard" is handled by the infrastructure. Developers and other users just have to use our SSH Docker daemon with their SSH key.

A few things to consider:

- Such a setup is great to move from "somewhat good cache re-use" to "very efficient cache re-use". If you're happy with your current setup, it may not be worth the trouble

- We could simplify the setup by using a single Docker image containing both the SSH server and the Docker daemon (though such an image may be complex to create; we'll explore this in another post)

- It might be considered easier to configure SSH keys at job level rather than at runner level

Conclusion

An SSH Docker daemon is a bit complex to set up, but it is easy to use and provides more efficient cache re-use than most patterns. It's probably worth giving it a try!