Cleaning up 7 TB of data from our on-prem GitLab Container Registry

GitLab Container Registry allows developers to manage container images per project via one or more Container Repositories. As storage size increases, so does cost 💸, and you'll want to clean up your Container Repositories.

Easier said than done: here's the story of how it went with our on-prem GitLab instance.

Automated cleanup policy will be enough... right?

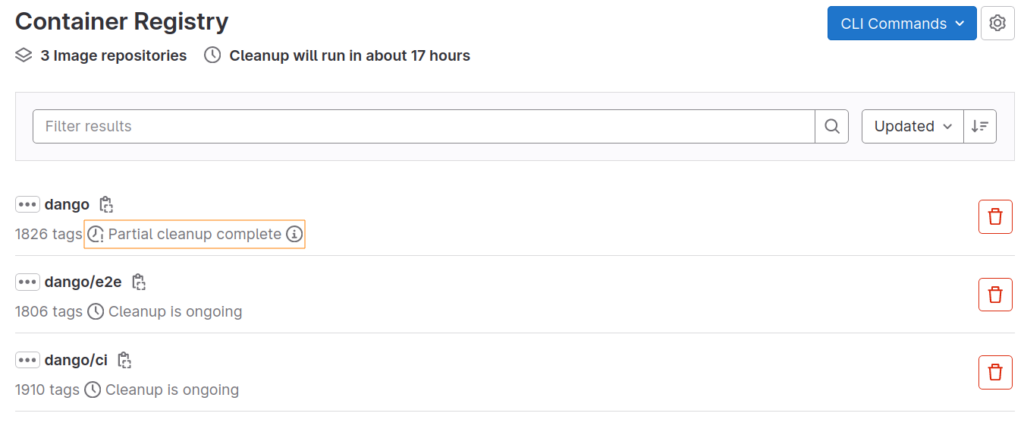

Container Registry comes with an automated cleanup policy. We configured policies on our projects and promptly forgot about them, thinking that would be enough. A few months later, we were notified about "lots of Docker tags" in a project's Container Repository. Indeed, that project had 1800+ image tags across multiple Repositories, and the count kept growing. Most of these tags should have been deleted by the cleanup policy a long time ago... Strange.

It turns out our GitLab instance's Container Registry cleanup was slow. Very slow. Only a few hundred tags were deleted every day, but we were pushing tags faster than that, so our Container Repositories kept growing! The GitLab UI does show a small "Partial cleanup complete" warning, but it's easy to miss.

It's possible to configure the number of Container Registry cleanup workers and the maximum number of tags to delete in the Admin area, but that may not be sufficient: cleanup may still be slow, and what if you don't have access to the Admin area? Instead, I wrote a small Container Registry cleanup CLI that runs cleanup more aggressively using the GitLab REST API.

```shell
# Clean all tags except releases from Container Repository 161
gitlab-container-registry-cleaner clean 161 -k 'v?[0-9]+\.[0-9]+\.[0-9]+.*' -d '.*' --no-dry-run
# 🔭 Listing 1564 tags (32 pages, 50 / page)
# [...]
# Found 1462 tags matching '.*' but not matching 'v?[0-9]+\.[0-9]+\.[0-9]+.*'
# 💀 Found 1042 tags to delete
# 🔥 Deleting 1042 tags
# [...]
# ✅ Deleted 1042 tags
```

The cleanup CLI goes like this:

- List all tags of a Container Repository

- Check whether each tag matches the regex to keep or delete (much like the cleanup policy)

- For all tags matching the "delete" regex and not the "keep" regex, fetch tag details to get the creation date (listing tags won't give you the creation date)

- Delete all tags that both match the regexes and are older than the specified number of days
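The selection steps above can be sketched in Bash with `curl` and `jq` against GitLab's registry REST endpoints (list tags, then get tag details for `created_at`). This is a minimal sketch, not the CLI's actual code: the function names, the `GITLAB_URL`/`GITLAB_TOKEN` variables, and anchoring both regexes at each end (mirroring how GitLab anchors cleanup policy regexes) are assumptions, and `date -d` assumes GNU coreutils.

```bash
#!/usr/bin/env bash
# Minimal sketch of the tag selection steps; not the CLI's actual implementation.
# Assumes GITLAB_URL (e.g. https://gitlab.example.com) and GITLAB_TOKEN are set.

# tag_is_deletable TAG CREATED_EPOCH NOW_EPOCH KEEP_RE DELETE_RE OLDER_THAN_DAYS
# Succeeds when TAG fully matches DELETE_RE, does not fully match KEEP_RE,
# and was created more than OLDER_THAN_DAYS days before NOW_EPOCH.
tag_is_deletable() {
  local tag=$1 created=$2 now=$3 days=$6
  local keep_re="^($4)\$" delete_re="^($5)\$"
  [[ $tag =~ $keep_re ]] && return 1
  [[ $tag =~ $delete_re ]] || return 1
  (( now - created > days * 86400 ))
}

# gitlab_get PATH: GET an API v4 path, failing on HTTP errors.
gitlab_get() {
  curl -sf --header "PRIVATE-TOKEN: $GITLAB_TOKEN" "$GITLAB_URL/api/v4/$1"
}

# list_tag_names PROJECT_ID REPO_ID: print every tag name, one per line,
# walking the paginated list endpoint until an empty page comes back.
list_tag_names() {
  local page=1 json names
  while :; do
    json=$(gitlab_get "projects/$1/registry/repositories/$2/tags?per_page=100&page=$page") || return 1
    names=$(jq -r '.[].name' <<<"$json") || return 1
    [[ -z $names ]] && return 0
    printf '%s\n' "$names"
    page=$((page + 1))
  done
}

# tag_created_epoch PROJECT_ID REPO_ID TAG: the list endpoint has no creation
# date, so fetch the tag details and convert created_at to epoch seconds.
tag_created_epoch() {
  local json created
  json=$(gitlab_get "projects/$1/registry/repositories/$2/tags/$3") || return 1
  created=$(jq -r '.created_at' <<<"$json") || return 1
  date -d "$created" +%s
}

# select_tags_to_delete PROJECT_ID REPO_ID KEEP_RE DELETE_RE OLDER_THAN_DAYS
# Prints the names of the tags that should be deleted.
select_tags_to_delete() {
  local tag created now keep_re="^($3)\$" delete_re="^($4)\$"
  now=$(date +%s)
  list_tag_names "$1" "$2" | while IFS= read -r tag; do
    # Regex check first, so details are only fetched for candidate tags.
    [[ $tag =~ $keep_re || ! $tag =~ $delete_re ]] && continue
    created=$(tag_created_epoch "$1" "$2" "$tag") || continue
    tag_is_deletable "$tag" "$created" "$now" "$3" "$4" "$5" && printf '%s\n' "$tag"
  done
}
```

For example, `select_tags_to_delete 42 161 'v?[0-9]+\.[0-9]+\.[0-9]+.*' '.*' 30` would print tags older than 30 days that don't look like releases (project ID 42 is a placeholder). Fetching details only for regex candidates keeps the number of API calls down, since one call per tag is needed to learn its age.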

With concurrent API requests, we were able to clean up our dozen active projects (about 8k tags) in under 2 minutes each, much faster than the cleanup policy, which was taking hours.
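Concurrency is what makes the difference here. A minimal Bash sketch of concurrent deletion, assuming tag names to delete arrive on standard input one per line: it uses GitLab's single-tag delete endpoint (`DELETE /projects/:id/registry/repositories/:repository_id/tags/:tag_name`) and `xargs -P` to run several requests at once. Function names, the default parallelism of 10 and the `GITLAB_URL`/`GITLAB_TOKEN` variables are assumptions, not the CLI's actual code.

```bash
#!/usr/bin/env bash
# Minimal sketch: delete registry tags concurrently with xargs -P.
# Assumes GITLAB_URL and GITLAB_TOKEN are exported (child bash processes need them).

# delete_tag PROJECT_ID REPO_ID TAG: delete one tag via the REST API.
delete_tag() {
  if curl -sf -X DELETE --header "PRIVATE-TOKEN: $GITLAB_TOKEN" \
      "$GITLAB_URL/api/v4/projects/$1/registry/repositories/$2/tags/$3" >/dev/null; then
    echo "deleted $3"
  else
    echo "failed to delete $3" >&2
  fi
}
# Make the function visible to the bash processes spawned by xargs.
export -f delete_tag

# delete_tags_concurrently PROJECT_ID REPO_ID [PARALLELISM] < tag-names
# Runs up to PARALLELISM (default 10) deletions at a time, one tag per input line.
delete_tags_concurrently() {
  xargs -P "${3:-10}" -I{} bash -c 'delete_tag "$1" "$2" "$3"' _ "$1" "$2" {}
}
```

Usage could look like `delete_tags_concurrently 42 161 20 < tags-to-delete.txt` (IDs and file name are placeholders). Keeping the parallelism moderate avoids hammering your GitLab instance.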

Good: the number of container images in our Container Repositories was back to an acceptable level. But what about actual storage size and cost?

Cleanup policy and garbage collection

The cleanup policy only removes image tag references, not the underlying layers and images. It's a bit like deleting a Git branch: commits are not immediately deleted but left dangling until you run `git gc`. To actually clean up the data, you must run Container Registry garbage collection using `gitlab-ctl registry-garbage-collect`.

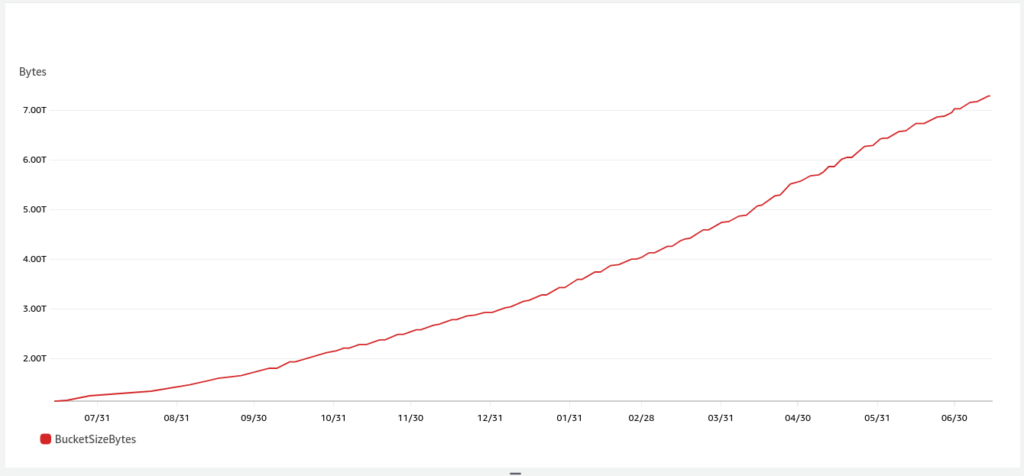

Our S3 storage looked like this after a few months left "ungarbaged". We went from ~500 GB to 7 TB of data in a year, and S3 storage and data transfer costs were having a serious impact on our bill 😨

Garbage collection time! It requires the Container Registry to be stopped or put in read-only mode, so we did a 2-hour trial run with the Registry in read-only mode to see if that was enough. It wasn't: the process spent the entire 2 hours marking stuff and did not delete anything. We interrupted it and prepared to let it run longer, hoping the marking phase would end in under 7 hours so it would actually delete things.

Here's a sample log extract of `gitlab-ctl registry-garbage-collect`:

```text
time="2023-07-21T13:01:00.660Z" level=info msg="marking manifest metadata for repository" digest="sha256: [...]
time="2023-07-21T13:01:03.156Z" level=info msg="marking blob" digest="sha256: [...]
```

We decided to run garbage collection nightly between 23:00 and 6:00 with the Container Registry in read-only mode. This way, our production Kubernetes cluster could still pull images at night. It went like this:

- I wrote a small script to enable/disable Container Registry read-only mode; let's call it `gitlab-registry-garbage-collect.sh`

- I configured cron jobs to start garbage collection at night and stop it in the morning:

```
# Start garbage collect at night
0 23 * * * /usr/local/sbin/gitlab-registry-garbage-collect.sh start
# Stop garbage collect in the morning
0 6 * * * /usr/local/sbin/gitlab-registry-garbage-collect.sh stop
```
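The script itself isn't reproduced here. As a rough, hypothetical sketch of what a `start`/`stop` wrapper driven by those cron jobs could look like: `start` refuses to run unless read-only mode could be enabled, then launches garbage collection in the background with a PID file; `stop` kills that process and leaves read-only mode. How read-only mode is toggled is left to placeholder functions (`enable_readonly`, `disable_readonly`) that deliberately fail until implemented; the paths and `GC_*` variables are assumptions too.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of gitlab-registry-garbage-collect.sh start|stop.
# Paths and GC_* variables are assumptions; override them via the environment.
: "${GC_CMD:=gitlab-ctl registry-garbage-collect}"
: "${GC_PIDFILE:=/var/run/gitlab-registry-gc.pid}"
: "${GC_LOG:=/var/log/gitlab-registry-gc.log}"

# Placeholders: how read-only mode is toggled is not shown here.
# They fail on purpose so garbage collection never starts on a writable registry.
enable_readonly() { echo "enable_readonly: not implemented" >&2; return 1; }
disable_readonly() { echo "disable_readonly: not implemented" >&2; return 1; }

gc_start() {
  if [[ -f $GC_PIDFILE ]] && kill -0 "$(<"$GC_PIDFILE")" 2>/dev/null; then
    echo "garbage collection already running" >&2
    return 1
  fi
  enable_readonly || return 1
  # GC_CMD is word-split on purpose so it can carry arguments.
  nohup $GC_CMD >>"$GC_LOG" 2>&1 &
  echo $! >"$GC_PIDFILE"
}

gc_stop() {
  if [[ -f $GC_PIDFILE ]]; then
    # SIGTERM to the recorded process only; child processes it spawned
    # may need to be stopped separately.
    kill "$(<"$GC_PIDFILE")" 2>/dev/null || true
    rm -f "$GC_PIDFILE"
  fi
  disable_readonly
}

# Dispatch only when arguments are given (e.g. from the cron jobs).
if (( $# > 0 )); then
  case $1 in
    start) gc_start ;;
    stop) gc_stop ;;
    *) echo "usage: $0 start|stop" >&2; exit 2 ;;
  esac
fi
```

Making the placeholders fail is a safety choice: running garbage collection while the registry still accepts pushes is exactly what the read-only requirement is meant to prevent.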

This time, garbage collection ran for 2 nights and we were happy to see a sweep phase starting in the logs. Apparently, garbage collection first marks every object eligible for deletion and only then starts deleting them. If you have many objects, the marking phase is awfully long.
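Since the marking phase can last for hours, it helps to check at a glance which stage a nightly run has reached. A minimal sketch, assuming the log is redirected to a file: it greps for the `msg` values that appear in the log extracts of this post (`marking ...`, `mark stage complete`, `starting sweep stage`, `batch deleted`). Other registry versions may log differently, and the `gc_stage` name is hypothetical.

```bash
#!/usr/bin/env bash
# Minimal sketch: report which garbage collection stage a log has reached,
# based only on msg values quoted in this post's log extracts.

# gc_stage LOGFILE: prints the furthest stage found in the log.
gc_stage() {
  local deleted
  if grep -qs 'msg="starting sweep stage"' "$1"; then
    # grep -c prints 0 but exits 1 when nothing matches, hence "|| true".
    deleted=$(grep -c 'msg="batch deleted"' "$1" || true)
    echo "sweep, batches deleted: $deleted"
  elif grep -qs 'msg="mark stage complete"' "$1"; then
    echo "mark complete"
  elif grep -qs 'msg="marking' "$1"; then
    echo "mark"
  else
    echo "not started"
  fi
}
```

Running something like `gc_stage /var/log/gitlab-registry-gc.log` in the morning (path assumed) tells you whether the night was spent marking or actually deleting.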

time="2023-07-21T01:10:36.569Z" level=info msg="blob eligible for deletion" digest="sha256: [...]

time="2023-07-21T01:10:36.569Z" level=info msg="mark stage complete" [...]

time="2023-07-21T01:10:36.569Z" level=info msg="starting sweep stage" [...]

time="2023-07-21T01:10:36.569Z" level=info msg="deleting manifest metadata in batches" batch_count=354 batch_max_size=100 [...]

time="2023-07-21T01:10:36.570Z" level=info msg="deleting batch" [...]

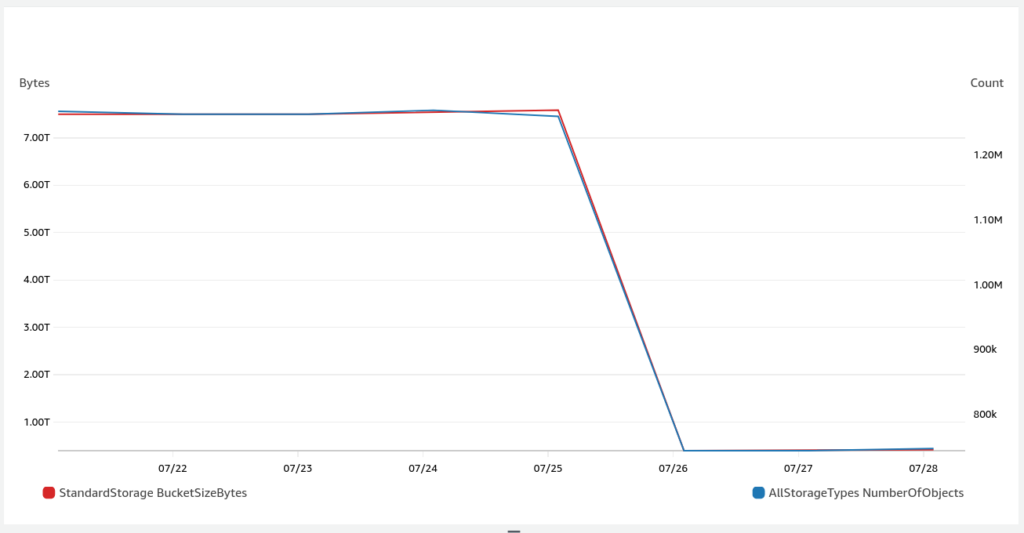

time="2023-07-21T01:10:37.460Z" level=info msg="batch deleted" [...]And here's the result in term of bucket object count for the 2 nights of 22/07 and 23/07 garbage collection ran 🥲 It stopped by itself after a few hours on the second night so it did all it could. No need to say we were a bit frustrated by the result...

Considering new objects were added while some were removed, we can estimate that ~5000 to ~10000 objects were deleted. Out of 1.26 million. Yay!... Bucket storage size didn't even budge; the result was only visible in the object count. Something was wrong: what did we miss?

Forgotten Container Repositories

After a coffee and a bit of reflection, we thought: what if we hadn't cleaned up some big Container Repositories somewhere? Maybe we missed old/archived repositories or devs' personal projects? Unfortunately, GitLab does not (yet?) provide a UI to list Container Repositories instance-wide, so we had to use the API directly.

Rather than listing all groups and projects and sifting through the data with a complex algorithm, I simply called the REST API endpoint "Get details of a single repository" concurrently for every ID in the range 1-10000, which was much faster and easier to set up. I implemented this list feature in my Container Registry cleanup CLI:

```shell
gitlab-container-registry-cleaner list all -o /tmp/repositories.json
```

Listing Container Repositories instance-wide this way, we found out we had:

- 101 Container Repositories. We had only cleaned up a dozen so far, so yeah, we missed quite a lot.

- Roughly 7000 image tags left. Our dozen cleaned-up projects were left with fewer than 500 tags, so quite a lot were hidden somewhere.
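The ID-scan trick described above can also be sketched in Bash. This is a hedged sketch, not the CLI's code: it calls the instance-level "Get details of a single repository" endpoint (`GET /registry/repositories/:id`, here with `tags_count=true`) for IDs 1 to N with `xargs -P`, keeps only successful responses (IDs that don't exist, or that the token can't see, return an error), and prints them as JSON Lines ordered by ID. Function names and the `GITLAB_URL`/`GITLAB_TOKEN` variables are assumptions; an admin token is presumably needed to see every repository.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: discover Container Repositories instance-wide by
# probing repository IDs 1..MAX_ID concurrently.
# Assumes GITLAB_URL and GITLAB_TOKEN are exported for the child processes.

# fetch_repository ID: print one repository's details; fail on HTTP errors (e.g. 404).
fetch_repository() {
  curl -sf --header "PRIVATE-TOKEN: $GITLAB_TOKEN" \
    "$GITLAB_URL/api/v4/registry/repositories/$1?tags_count=true"
}
export -f fetch_repository

# list_all_repositories [MAX_ID] [PARALLELISM]
# Prints one JSON object per line for each repository found, ordered by ID.
list_all_repositories() {
  local max_id=${1:-10000} parallelism=${2:-20} dir id
  dir=$(mktemp -d) || return 1
  # Each job writes to its own file so concurrent outputs never interleave.
  # xargs exits non-zero because most IDs don't exist; that is expected.
  seq 1 "$max_id" | xargs -P "$parallelism" -I{} bash -c \
    'out=$(fetch_repository "$1") && printf "%s\n" "$out" > "$2/$1.json"' _ {} "$dir" || true
  for (( id = 1; id <= max_id; id++ )); do
    [[ -f $dir/$id.json ]] && cat "$dir/$id.json"
  done
  rm -rf "$dir"
}
```

For instance, `list_all_repositories 10000 20 > /tmp/repositories.jsonl` followed by `jq -s 'sort_by(-.tags_count) | .[:10]' /tmp/repositories.jsonl` would show the ten repositories holding the most tags.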

From there we started the boring work of:

- Inventorying all these Container Repositories and their owners within the company (if any): were they active or not? Could we delete them?

- Deleting Container Repositories we didn't need anymore

- Running Container Registry Cleaner on Container Repositories which were still in use

Within 24h we went down to ~40 mostly empty Container Repositories and fewer than 500 image tags. Afterwards, garbage collection ran for a few nights from 23:00 to 6:00. The marking phase took up to 4 hours before the blessed sweep phase started. Here's the bucket size and storage graph we obtained:

This time we went from ~7.5 TB of data to ~500 GB, and from 7.26 million objects to ~750k! 🥳 We claimed victory, congratulated ourselves and closed our internal issue #2901.

Summary: a process for efficient GitLab Container Registry cleanup

Remove image tags and unused Container Repositories:

- Configure Cleanup policy on your projects.

- You may have forgotten or archived projects with unused Container Repositories. Make sure to identify them.

- The GitLab UI does not provide an easy way to list Container Repositories instance-wide. Use the Container Registry cleanup CLI to get this information easily.

- The cleanup policy runs instance-wide and may be slow. If you have lots of tags to clean up, your Container Repositories may keep growing indefinitely.

- Tweak the cleanup policy configuration in the Admin area to get more workers and higher limits.

- Alternatively, use the GitLab Container Registry cleanup CLI to clean up Container Repositories on demand.

- If you don't use a Container Repository anymore, remember to delete it.

- Cleanup policies and deleting Container Repositories are not enough to delete data: they won't delete the underlying container images and layers. You must run Container Registry garbage collection.

Run Container Registry garbage collection:

- Container Registry garbage collection will delete dangling images and layers

- Garbage collection runs in 2 phases: `marking` and `sweep`. If you have lots of data, the `marking` phase may take a long time (several hours). Plan accordingly.

- You can configure nightly cron jobs to automatically run garbage collection while the Container Registry remains available in read-only mode.

- Here is a helper script you can use with cron jobs

I hope this helped. Do not hesitate to leave a comment, check out my other posts or take a look at my GitHub projects.

Hey – Great post! I’m a PM at GitLab and was wondering if you’d be interested in an upcoming beta program we will launch that will help folks like you get the latest GitLab container registry. This latest update includes support for online garbage collection and a 200x performance improvement in the cleanup policies. If you are interested, tag me ‘trizzi’ in https://gitlab.com/gitlab-org/gitlab/-/issues/379240.

Sure will, thanks !